Introduction

The 1st International Workshop on LLM App Store Analysis (LLMapp 2025), co-located with FSE 2025 in Trondheim, Norway, invites submissions that explore various aspects of large language model (LLM) app stores. This workshop aims to bring together researchers, industry practitioners, and students to discuss the latest trends, challenges, and future directions in LLM app ecosystems.

LLMs are trained on vast amounts of text data, allowing them to perform a wide range of natural language processing tasks. The advent of LLMs has opened up new possibilities for various applications, including chatbots, content generation, language translation, and sentiment analysis. As the capabilities of LLMs continue to expand, there has been a growing interest in making these models accessible to a broader audience. This has led to the emergence of LLM app stores, such as:

- OpenAI's GPT Store

- FlowGPT

- Quora's Poe

- ByteDance's Coze

- ByteDance's Cici

- ByteDance's Doubao

- Hugging Face's HuggingChat

- Zhipu AI's ChatGLM

- Baidu's ERNIE Bot

- Baidu AI Cloud's Qianfan

- Alibaba Cloud's Tongyi

- Tencent's Yuanqi

- Character.AI

- JanitorAI

- MiniMax's Talkie

- Westlake Xinchen's Joyland

- Chub Venus AI

- Crushon.AI

These platforms provide a centralized marketplace where users can browse, download, and use LLM-based apps across various domains, such as productivity, education, entertainment, and personal assistance.

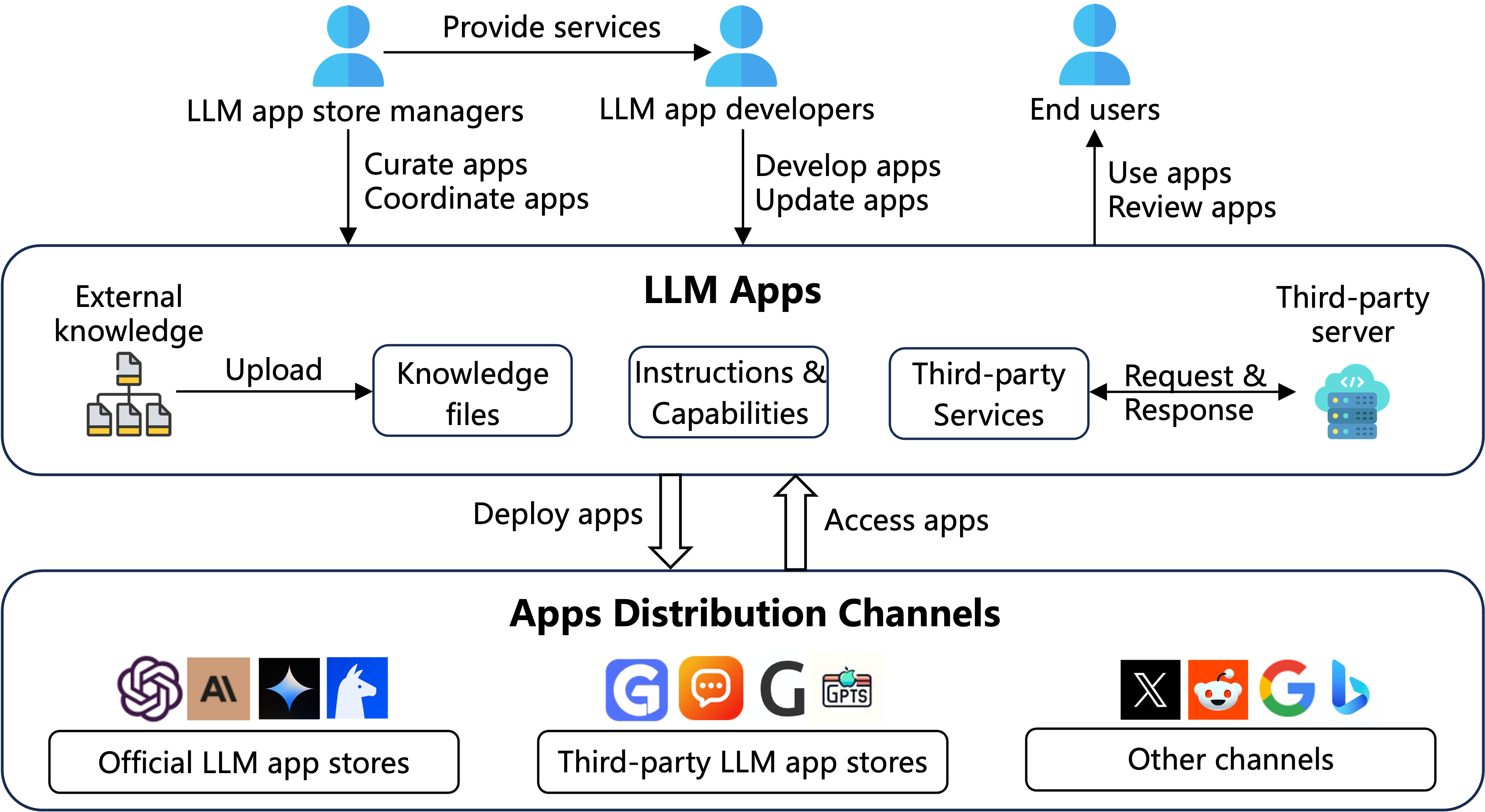

Definitions

- LLM app: A specialized application (app) powered by an LLM, distinct from conventional mobile apps that may incorporate LLM technology. These apps, typically found on platforms like GPT Store, Poe, Coze, and FlowGPT, are specifically designed to harness the advanced capabilities of LLMs for a variety of purposes, tasks, or scenarios.

- LLM app store: A centralized platform that hosts, curates, and distributes LLM apps, enabling users to discover and access tailored intelligent services.